Zico Kolter Leads OpenAI’s Safety Panel to Navigate AI Risks

A professor at Carnegie Mellon University, Zico Kolter has taken on a critical role in overseeing artificial intelligence (AI) safety as chair of OpenAI’s Safety and Security Committee. This four-member panel holds the authority to stop the release of new AI systems deemed unsafe, a responsibility that has gained newfound significance following recent regulatory developments in California and Delaware.

Kolter’s committee can halt the launch of technologies that pose severe risks, including those that could be weaponized or that might negatively impact mental health. In an interview with The Associated Press, Kolter emphasized the broad spectrum of safety concerns associated with AI, stating, “Very much we’re not just talking about existential concerns here. We’re talking about the entire swath of safety and security issues.”

OpenAI appointed Kolter to lead its safety efforts more than a year ago, but his role gained new prominence last week when regulatory agreements were announced. These agreements, reached with California Attorney General Rob Bonta and Delaware Attorney General Kathy Jennings, stipulate that safety considerations must take precedence over financial interests as OpenAI transitions to a new public benefit corporation structure.

Importance of Safety in AI Development

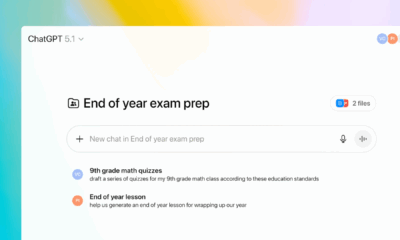

OpenAI has been at the forefront of AI innovation since its inception as a nonprofit research lab in 2015. The organization aims to develop AI that is beneficial to humanity. However, the rapid commercialization of its products, particularly with the launch of ChatGPT, has raised concerns about the safety of these technologies. Critics argue that OpenAI has prioritized speed over thorough safety evaluations, especially after the temporary removal of CEO Sam Altman in 2023 exposed internal divisions regarding its mission.

The recent agreements aim to rebuild trust by ensuring that safety protocols are integrated into OpenAI’s operations. Kolter’s committee will have the power to request delays in the release of AI models until necessary safety measures are implemented. He will also have full observation rights at for-profit board meetings but will not serve on the for-profit board itself. This structure aims to maintain a clear line of oversight while allowing for the pursuit of profitability.

Kolter remarked that the panel’s existing authority remains intact despite the organizational changes. He noted that the committee’s discussions will include a wide range of concerns, from cybersecurity risks associated with AI agents to the psychological impacts of interactions with AI systems.

Addressing Emerging AI Challenges

The scope of issues Kolter and his team will address is extensive. He highlighted potential threats such as an AI agent encountering malicious text online that causes it to leak data. He also pointed to the distinct challenges posed by new AI models that could help malicious users carry out sophisticated cyberattacks or even develop bioweapons.

Another pressing concern is the influence of AI on mental health. Kolter stated, “The impact to people’s mental health, the effects of people interacting with these models and what that can cause. All of these things, I think, need to be addressed from a safety standpoint.” This perspective is particularly relevant in light of ongoing discussions about the responsibility of tech companies in ensuring their products do not harm users.

OpenAI has recently faced criticism over the behavior of ChatGPT, including a wrongful-death lawsuit filed by the family of a teenager who took his own life after extended interactions with the chatbot. The case underscores the urgency of Kolter's role in protecting users.

Kolter, who began studying AI in the early 2000s, has witnessed significant shifts in the field. “When I started working in machine learning, this was an esoteric, niche area,” he said. His long-standing relationship with OpenAI, which includes attending its launch party in 2015, gives him a unique perspective on the rapid evolution of AI technologies.

Industry observers are closely monitoring Kolter’s efforts as he leads this critical safety initiative. Nathan Calvin, general counsel at the AI policy nonprofit Encode, expressed cautious optimism about Kolter’s leadership. He stated, “I think he has the sort of background that makes sense for this role. He seems like a good choice to be running this.” Calvin emphasized the importance of the new commitments made by OpenAI, stating that they could be “a really big deal if the board members take them seriously.”

As OpenAI moves to its new for-profit structure, Kolter's oversight will be central to keeping safety a priority. How effectively his committee addresses emerging risks will help shape the future of AI technology and its impact on society.