ETRI Proposes New Standards for AI Safety and Consumer Trust

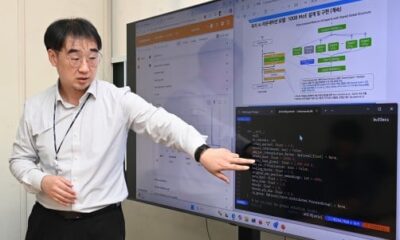

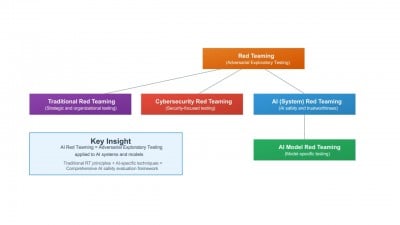

The Electronics and Telecommunications Research Institute (ETRI) has proposed two new standards aimed at improving the safety and trustworthiness of artificial intelligence (AI) systems. The proposals, the AI Red Team Testing standard and the Trustworthiness Fact Label (TFL), have been submitted to the International Organization for Standardization and the International Electrotechnical Commission (ISO/IEC) for consideration and development.

The AI Red Team Testing standard is designed to identify potential risks in AI systems before they cause harm. By simulating attack scenarios and probing for vulnerabilities, it seeks to provide a safer framework for deploying AI across industries. Such a proactive approach matters because AI technologies are spreading into more and more sectors, raising concerns about their reliability and safety.

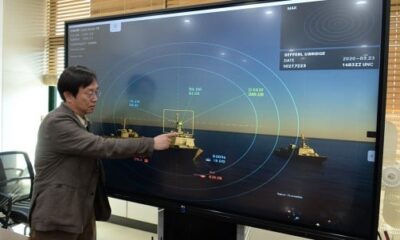

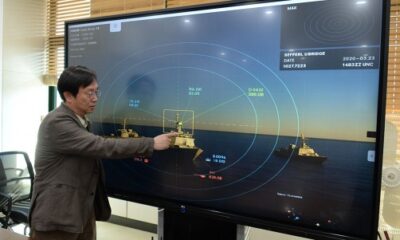

Complementing this risk assessment, the Trustworthiness Fact Label (TFL) aims to give consumers a clear picture of the authenticity and reliability of AI applications. The label is intended to serve as a guide, allowing users to make informed decisions about the AI systems they choose to use. The TFL is expected to increase transparency in the AI market and foster greater consumer confidence.

ETRI, based in South Korea, is leading this initiative as part of its broader commitment to establishing international benchmarks for AI safety. The institute emphasizes the importance of developing standards that not only address technical specifications but also prioritize user trust in the burgeoning field of artificial intelligence.

As AI technologies continue to evolve rapidly, the initiative by ETRI highlights a growing recognition among stakeholders of the need for robust frameworks that ensure ethical and responsible use. The standards are anticipated to play a significant role in guiding both developers and users toward safer AI practices.

The proposal is timely, given the increasing scrutiny of AI systems and the potential implications of their deployment in everyday life. By working with ISO/IEC, ETRI aims to set a global standard that can be adopted across jurisdictions, promoting a unified approach to AI safety and trustworthiness.

The development of these standards will be closely monitored by industry experts and policymakers alike, as they could significantly influence future regulations and practices surrounding AI technologies. ETRI’s proactive measures are a step toward addressing public concerns about AI and ensuring that technological advancements are aligned with societal values and expectations.

In conclusion, the proposal of the AI Red Team Testing standard and the Trustworthiness Fact Label (TFL) represents a significant step in the effort to create safer and more reliable AI systems. As discussions progress within ISO/IEC, the global community will be watching closely to see how these standards shape the future landscape of artificial intelligence.