Paperless-ngx and Local LLM Transform Document Management

Recent developments in document management technology have changed how users handle their paperwork. Pairing Paperless-ngx with a local large language model (LLM), served through a runtime such as Ollama, streamlines the process of digitizing and organizing documents, invoices, and receipts. The combination is proving especially useful for individuals managing extensive collections of paperwork.

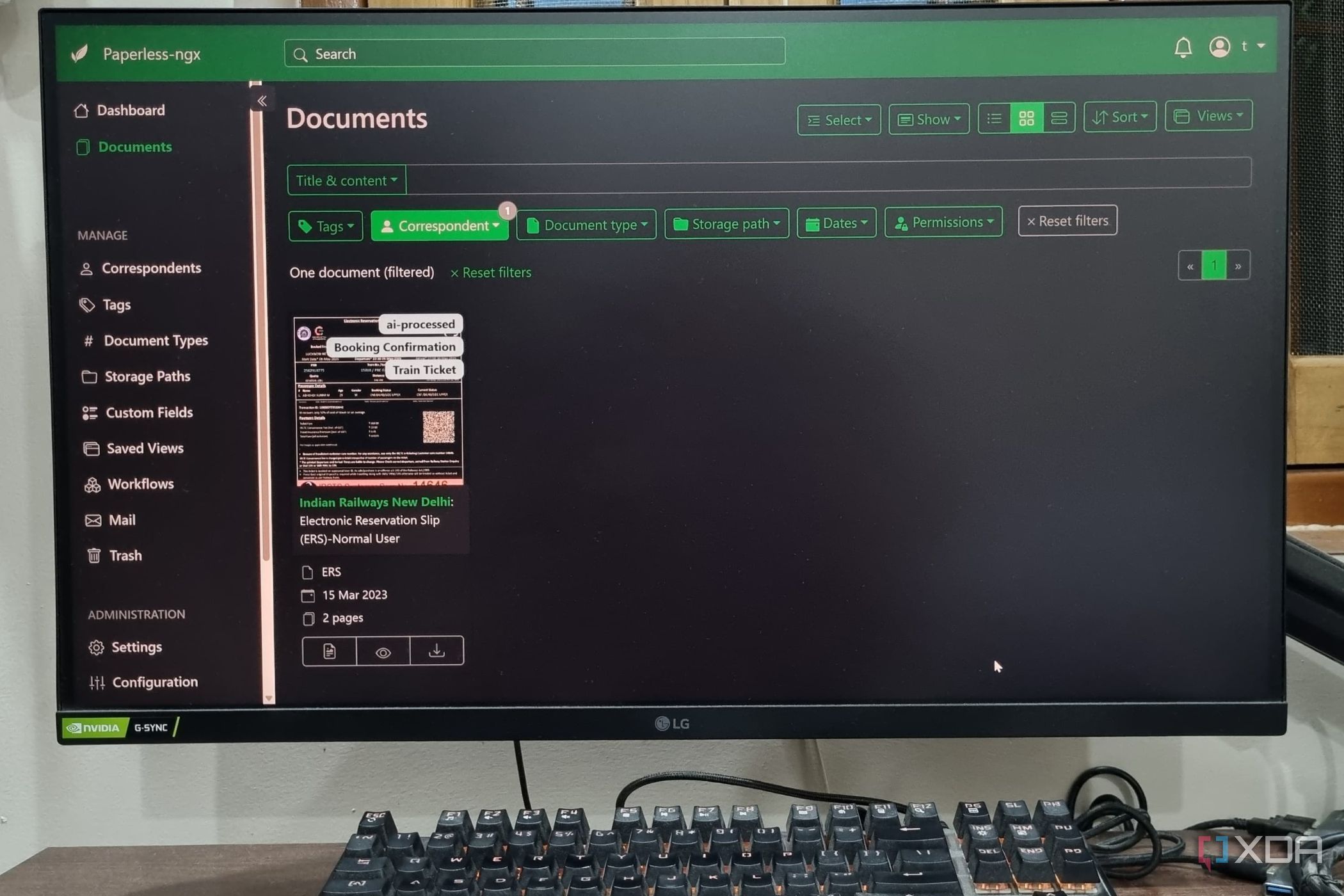

Paperless-ngx is a self-hosted application that lets users digitize and archive their documents. Its auto-tagging simplifies categorization and makes specific documents easier to locate. Physical copies still have their value, but most documents now exist in digital formats such as images or PDFs, which makes robust digital management tools a necessity.

Challenges of Traditional Document Management

Despite these advantages, Paperless-ngx users often run into trouble as their archive grows. Tagging is a vital feature for sorting, but as the number of files increases, even a well-organized system can become unwieldy. For instance, finding a document related to a particular project may depend on remembering specific details, which is time-consuming when the files are not named consistently.

Moreover, extracting necessary information from lengthy documents can be labor-intensive. A user might need to sift through several pages to find specific information, such as rates in a contract for a construction project. This process becomes tedious and inefficient, underscoring the need for advanced solutions.

Enhancing Workflow with Local LLM Integration

The introduction of Paperless AI, which utilizes a local LLM, addresses these challenges effectively. This integration allows users to search through their entire document collection without needing to recall exact names or tags. Users can also initiate AI-driven functions to summarize documents or provide insights into their contents, significantly reducing the time spent on information retrieval.

Paperless AI features two primary chat modes. The Retrieval-Augmented Generation (RAG) chat mode is particularly useful, as it retrieves related documents based on a single query. For example, a simple request like “show me the false ceiling rates” can yield accurate results, complete with a brief explanation of each document. Alternatively, the standard chat mode allows for in-depth analysis of individual files, making it easier to understand complex documents.
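The retrieval step behind a RAG-style chat can be illustrated with a small sketch. This is not how Paperless AI is implemented; it is a toy version that assumes plain word-count vectors and cosine similarity in place of a real embedding model, and the archive contents and file names are invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the titles of up to top_k documents that best match the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(text))), title)
        for title, text in documents.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Documents that share no words with the query are dropped.
    return [title for score, title in scored[:top_k] if score > 0]


def build_prompt(query: str, documents: dict[str, str], top_k: int = 2) -> str:
    """Combine the retrieved documents and the question into one LLM prompt."""
    titles = retrieve(query, documents, top_k)
    context = "\n\n".join(f"[{t}]\n{documents[t]}" for t in titles)
    return f"Answer using only these documents:\n\n{context}\n\nQuestion: {query}"


# Hypothetical sample archive, invented for illustration.
ARCHIVE = {
    "contractor-quote.pdf": "False ceiling rates: gypsum board per square foot, labour included.",
    "electricity-bill.pdf": "Monthly electricity bill with meter reading and amount due.",
    "paint-invoice.pdf": "Invoice for wall paint and primer, rates per litre.",
}
```

Calling `retrieve("show me the false ceiling rates", ARCHIVE, top_k=1)` selects the contractor quote, and `build_prompt` packs the matching text into the prompt that would be handed to the model. A production system would typically swap the word counts for learned embeddings, but the retrieve-then-generate shape stays the same.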

A user can also select a document for manual processing, enhancing the interaction with the AI. This flexibility is crucial for those dealing with extensive documentation, as it allows for detailed reviews of specific files without the need for exhaustive reading sessions.

Upon setting up Paperless AI, users are prompted to choose default configurations, such as marking all processed documents with an AI tag. This automation ensures that new files uploaded to the Paperless-ngx server are processed seamlessly in the background, with users able to monitor processing status directly from the dashboard. This interface offers a user-friendly experience, with minimal textual clutter, allowing for easy navigation and management of documents.
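As a rough illustration of what marking a processed document with an AI tag could look like, the sketch below builds a request against the Paperless-ngx REST API. It assumes token authentication and a `tags` field holding numeric tag IDs on the document endpoint; the base URL, token, document ID, and tag IDs are placeholders, and nothing here is taken from Paperless AI's own code.

```python
import json
import urllib.request


def with_ai_tag(current_tag_ids: list[int], ai_tag_id: int) -> list[int]:
    """Return the tag list with the AI tag added once, keeping the order."""
    if ai_tag_id in current_tag_ids:
        return list(current_tag_ids)
    return [*current_tag_ids, ai_tag_id]


def build_tag_request(
    base_url: str, token: str, document_id: int, tag_ids: list[int]
) -> urllib.request.Request:
    """Build (but do not send) a PATCH request that sets a document's tags."""
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/documents/{document_id}/",
        data=json.dumps({"tags": tag_ids}).encode("utf-8"),
        headers={
            "Authorization": f"Token {token}",  # assumed token auth scheme
            "Content-Type": "application/json",
        },
        method="PATCH",
    )


# Placeholder values: document 42 already carries tags 3 and 7,
# and 12 stands in for the ID of the AI tag.
request = build_tag_request(
    "http://localhost:8000", "example-token", 42, with_ai_tag([3, 7], 12)
)
```

Passing `request` to `urllib.request.urlopen` would send it to a running server. Keeping the merge in `with_ai_tag` means a document that is processed twice does not end up with a duplicated tag.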

Local Processing for Enhanced Security and Efficiency

One significant advantage of using a local LLM is that it removes the reliance on external APIs, which can become costly over time. On a capable system, such as one equipped with an RTX 3060 graphics card, Ollama can run models without a subscription or a constant internet connection. Local processing avoids network round trips and also strengthens data security, because sensitive documents stay on the user’s machine instead of being uploaded to external servers.
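To make the local setup concrete, here is a minimal sketch of asking a locally running Ollama server to summarize a document over its HTTP API. It assumes Ollama is listening on its default port, 11434, and that a model has already been pulled; the model name `llama3.2` and the prompt wording are illustrative choices, not settings taken from Paperless AI.

```python
import json
import urllib.request

# Ollama's default local address; adjust if the server listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_summary_request(text: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request for Ollama."""
    payload = {
        "model": model,  # assumed model name; use one that is pulled locally
        "prompt": f"Summarize this document in three sentences:\n\n{text}",
        "stream": False,  # ask for a single JSON object instead of a stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize(text: str, model: str = "llama3.2") -> str:
    """Send the request to the local Ollama server and return its reply text."""
    with urllib.request.urlopen(build_summary_request(text, model)) as resp:
        return json.loads(resp.read())["response"]
```

Because the request only ever targets `localhost`, the document text never leaves the machine. `summarize` needs a running Ollama instance, while `build_summary_request` can be inspected without one.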

The integration of Paperless-ngx and Paperless AI represents a significant advancement in document management, particularly for users handling large volumes of paperwork. The combination of automatic processing and local LLM technology offers a cost-effective solution that prioritizes efficiency and security.

In conclusion, the adoption of these technologies is reshaping how individuals manage their documents. With the ability to self-host and process data locally, users can preserve valuable information while maintaining control over their digital environment. For anyone looking to streamline document management, pairing Paperless AI with Paperless-ngx is a strong option.
