AI Collaboration: Bridging Gaps Between Human and Machine Insight

On October 23, 2025, Aaron Roth, a professor of computer and cognitive science at the University of Pennsylvania, delivered a talk titled “Agreement and Alignment for Human-AI Collaboration” at Johns Hopkins University’s Whiting School of Engineering. The talk presented findings from three research papers on the intersection of human decision-making and artificial intelligence (AI).

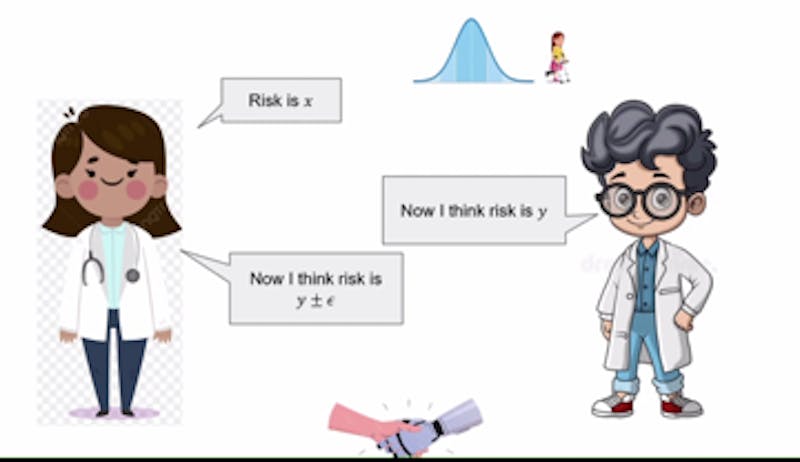

As AI technology continues to permeate various sectors, researchers are increasingly focused on how these systems can assist humans in making critical decisions. Roth illustrated this point with an example from healthcare, where AI could support doctors in diagnosing patients. In this scenario, the AI generates predictions based on various factors, such as previous diagnoses and symptoms, which the physician then reviews. This collaborative approach allows doctors to either agree or disagree with the AI’s insights based on their clinical experience and patient evaluation.

Understanding Agreement in AI Systems

Roth emphasized that when the AI and the doctor disagree, the two can engage in an iterative dialogue. In each round, one side states its current view and the other updates its own view in response, until they reach a consensus. This process rests on the assumption of a common prior: the AI and the human start with shared assumptions about the situation, even though each holds different pieces of information. Under these conditions, the two are said to exhibit Perfect Bayesian Rationality.
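The iterative dialogue described above can be illustrated with a textbook finite-state agreement exchange. The sketch below is not taken from Roth's papers; it assumes a small set of possible states, a uniform common prior, and two hypothetical information partitions named `doctor` and `model`. The two sides alternate in announcing their posterior probability of an event, and each announcement lets the listener rule out states in which the speaker would have said something different.

```python
from fractions import Fraction


def posterior(prior, event, info):
    """P(event | info) under the shared prior; info is a non-empty set of states."""
    total = sum(prior[s] for s in info)
    return sum(prior[s] for s in info if s in event) / total


def cell(partition, state):
    """The block of an agent's partition that contains `state` (what the agent observes)."""
    return next(block for block in partition if state in block)


def exchange(prior, event, partitions, true_state, max_rounds=50):
    """Alternate announcements between two agents and return the list of announced posteriors."""
    public = set(prior)  # states still consistent with every announcement so far
    history = []
    stable = 0  # consecutive announcements that revealed nothing new
    for rnd in range(max_rounds):
        part = partitions[rnd % 2]
        said = posterior(prior, event, cell(part, true_state) & public)
        # Keep only the states in which this speaker would have announced the same value.
        consistent = {
            s for s in public
            if posterior(prior, event, cell(part, s) & public) == said
        }
        stable = stable + 1 if consistent == public else 0
        public = consistent
        history.append(said)
        if stable == 2:  # neither agent's announcement changes anything: stop
            break
    return history


# Illustrative assumptions: nine equally likely states and two different partitions.
prior = {s: Fraction(1, 9) for s in range(1, 10)}
doctor = [{1, 2, 3}, {4, 5, 6}, {7, 8, 9}]
model = [{1, 2, 3, 4}, {5, 6, 7, 8}, {9}]
event = {3, 4}

history = exchange(prior, event, [doctor, model], true_state=1)
print([str(p) for p in history])  # ['1/3', '1/2', '1/3', '1/3', '1/3']
```

In this toy run the two sides initially disagree (1/3 versus 1/2) and then settle on 1/3 after a few rounds. Exact fractions are used so that announcements can be compared for equality without floating-point noise.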

Despite its theoretical appeal, Roth acknowledged that a common prior is hard to establish in practice. Real-world problems, particularly multi-dimensional ones such as hospital diagnostic codes, complicate the agreement process. He introduced the concept of calibration, likening it to evaluating the accuracy of a weather forecast: of the days on which a forecaster predicts a 70% chance of rain, about 70% should turn out to be rainy. Roth explained, “You can sort of design tests such that they would pass those tests if they were forecasting true probabilities.” Calibration, in this context, provides a reliable basis for agreement between human and AI.
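One common way to run such a test is to group forecasts into bins and compare the average forecast in each bin with how often the event actually occurred. The following is a minimal sketch under that assumption, not the specific test Roth described; the function names, the equal-width binning, and the rain data are all hypothetical.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=10):
    """Bucket forecasts into equal-width bins. For each non-empty bin, return
    (bin index, count, mean forecast, observed frequency of the event)."""
    bins = defaultdict(list)
    for p, y in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # a forecast of 1.0 goes in the last bin
        bins[idx].append((p, y))
    rows = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        freq = sum(y for _, y in pairs) / len(pairs)
        rows.append((idx, len(pairs), mean_p, freq))
    return rows


def expected_calibration_error(forecasts, outcomes, n_bins=10):
    """Average gap between mean forecast and observed frequency, weighted by bin size."""
    rows = calibration_table(forecasts, outcomes, n_bins)
    n = sum(count for _, count, _, _ in rows)
    return sum(count / n * abs(mean_p - freq) for _, count, mean_p, freq in rows)


# Made-up data: 1 means it rained, 0 means it did not.
rain_forecasts = [0.8] * 5 + [0.2] * 5
rain_observed = [1, 1, 1, 1, 0] + [0, 0, 0, 0, 1]
well_calibrated = expected_calibration_error(rain_forecasts, rain_observed)

# A forecaster who says 80% on days when it rains only one time in five.
overconfident = expected_calibration_error([0.8] * 5, [1, 0, 0, 0, 0])

print(round(well_calibrated, 3), round(overconfident, 3))
```

A forecaster reporting true probabilities would score close to zero on this measure given enough data, while the overconfident forecaster in the example scores about 0.6. A low score alone does not prove the forecasts are the true probabilities; it only shows they pass this particular test.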

Addressing Misalignment and Market Competition

Roth further examined the implications of misalignment in goals between AI systems and human operators. For instance, if an AI is developed by a pharmaceutical company, its recommendations may inadvertently favor the company’s products over more suitable treatments. To counteract this potential bias, Roth suggested that doctors consult multiple large language models (LLMs) from different companies. This approach would encourage competition among AI providers, prompting them to enhance the accuracy and reliability of their models.

Ultimately, Roth concluded that real probabilities, which accurately depict the complexities of the world, are ideal but not always necessary for effective decision-making. Instead, it is often sufficient for probabilities to remain unbiased within a specific framework. By leveraging data analysis and calibration techniques, doctors and AI can work together to reach informed agreements regarding diagnoses and treatment options, thus improving patient care.

The dialogue initiated by Roth’s talk highlights the evolving landscape of AI in healthcare and the potential for collaborative systems to enhance human decision-making. As AI continues to advance, the focus on effective human-AI partnerships will be crucial in navigating the complexities of various fields.
